The Language of Tears

眼泪的语言

Jan/2019 (created in May 2018)

Xu made a tear machine that pushes out small amounts of liquid (tears) in response to the facial expressions in "I Dreamed a Dream" from "Les Miserables". The main technique she used was machine learning. A classifier was trained on her own images labeled "normal" or "cry"; when the current frame of the actress's face in the video is classified as "cry", it triggers the dummy head to drop water, which can be seen as crying.

PROCESS:

My project focuses on tears, a humanized carrier of extreme emotion, and on how tears can be defined as a language for communication between humans and machines.

From a psychological perspective, people tend to shed sad tears when they see something that has much in common with their own experiences. People also tend to weep when somebody weeps in front of them, which can stir sympathy in their hearts. People tend to mimic another person's facial expression when communicating. Emotions truly pass between people and influence how others feel. I want to focus on these humanized interactions and build an emotional link between humans and machines.

Machines may not have real emotions themselves, but AI can help machines learn and do what humans teach them to do. I admit that emotions are unpredictable and are a key feature of being human. But a person's facial expression and physical state can usually reveal their emotion. For instance, we can conclude that a person is crying from their facial expression, or from two faint, moving traces of water under the eyes. So can AI. It learns what kind of data, such as facial expressions, expresses people's emotions. AI can learn and mimic human reactions based on what shows on our faces.

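This work is my first art installation, and the first time I fully applied machine learning to an artwork. It uses tears, a carrier of extreme human emotion, and asks how tears can be defined as a language for emotional communication between humans and machines. Through the imagery of tears, the work seeks a poetic form of expression in communication.

From a psychological perspective, one reason people shed sad tears is that they see sad experiences similar to their own; another is that someone crying in front of them stirs the compassion and sympathy in their hearts. In communication, people tend to mimic other people's facial expressions. Machines have no emotions, but artificial intelligence can help machines learn and do what humans teach them to do. I admit that emotions are hard to predict and analyze, but some human emotions convey information through changes in facial features, and this information can be data, a form that machines can understand. For example, to judge whether someone is crying or has cried, we can look at their current expression or check for tear stains under their eyes. AI can make these judgments too. By having a machine learn from a large number of easily distinguishable expression images, it can predict the expressions of people in new data. In this work, for example, I first had the machine learn from a large number of photos of crying and normal expressions, and the machine built a model from them. When I then give it a set of photos of another person, it can tell which ones show crying and which show a normal expression. I used this technique to complete my work, and the machine learning results control the tears of the dummy head.

The work is presented as a live, real-time performance. The dummy head faces the "I Dreamed a Dream" scene from the film "Les Miserables" and sheds tears according to what it watches.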

I made a tears machine based on an open-source design available online (a syringe pump). The machine pushes out small, accurate amounts of liquid, which makes the falling water look more like real human tears. The tears need to be controllable, so the machine uses an Arduino to move the water smoothly forward and backward. With the help of machine learning, if the current frame of the video is classified as "cry", it triggers the syringe in the tears machine to push, so that the dummy head drops water, which can be seen as crying.
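The trigger step described here can be sketched roughly as follows. This is only an illustration, not the code used in the installation: the one-byte `PUSH_COMMAND`, the `trigger_tears` function, and the `min_gap` safeguard are all hypothetical names and choices, and an in-memory buffer stands in for the serial connection to the Arduino so the sketch runs without hardware.

```python
import io
import time

# Hypothetical one-byte command; the real Arduino protocol is not documented here.
PUSH_COMMAND = b"P"


def trigger_tears(labels, port, min_gap=0.0, clock=time.monotonic):
    """Send a push command for each frame labeled "cry".

    labels  -- iterable of per-frame labels, "cry" or "normal"
    port    -- any object with a write(bytes) method (for example a serial port)
    min_gap -- minimum seconds between pushes (an assumed safeguard)
    Returns the number of push commands sent.
    """
    pushes = 0
    last_push = None
    for label in labels:
        if label != "cry":
            continue
        now = clock()
        if last_push is not None and now - last_push < min_gap:
            continue  # too soon after the previous push, skip this frame
        port.write(PUSH_COMMAND)
        last_push = now
        pushes += 1
    return pushes


# An in-memory buffer stands in for the serial port.
fake_port = io.BytesIO()
sent = trigger_tears(["normal", "cry", "cry", "normal", "cry"], fake_port)
print(sent, fake_port.getvalue())  # 3 b'PPP'
```

With real hardware, `port` could be any serial connection object that exposes `write`; raising `min_gap` would space the drops out instead of pushing on every crying frame.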

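Tears machine: inspired by an open-source design for medical use found online. Water is placed in the syringe, and the machine moves the syringe's plunger to push out small, tear-like amounts of liquid. The dark blue parts are 3D printed.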

As with machine learning in general, I first needed training data to train the network. I took pictures of myself crying and with a normal expression, around 2,000 images of each. These were the training data. The main technique in the machine learning part is the CNN (convolutional neural network), which is widely used in deep learning. By studying the dataset and the corresponding labels (cry or not), the network learns the underlying patterns in an image that characterize a crying person, for example the shape of the mouth or the eyes. The code was adapted from here.
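To give a feel for what a convolutional layer computes, here is a minimal pure-Python sketch of one forward pass: a convolution, a ReLU, a global max pool, and a sigmoid that yields a "cry" probability. It is not the network used in this project. The kernel, weight, and bias are hand-picked for illustration, whereas a real CNN learns them from the labeled images, and all function names are hypothetical.

```python
import math


def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Keep positive responses, zero out negative ones."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def global_max(feature_map):
    """Strongest response anywhere in the feature map."""
    return max(max(row) for row in feature_map)


def cry_probability(image, kernel, weight, bias):
    """Tiny forward pass: convolution -> ReLU -> global max pool -> sigmoid."""
    score = weight * global_max(relu(conv2d_valid(image, kernel))) + bias
    return 1.0 / (1.0 + math.exp(-score))


# Toy 4x4 "image" with a vertical edge, and a kernel that responds to such edges.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d_valid(image, kernel)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
print(cry_probability(image, kernel, weight=1.0, bias=-1.0))
```

The feature map is largest where the kernel's pattern appears in the image. In a trained network, many such kernels pick up features like the shape of the mouth or eyes, and later layers combine them into the final label.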

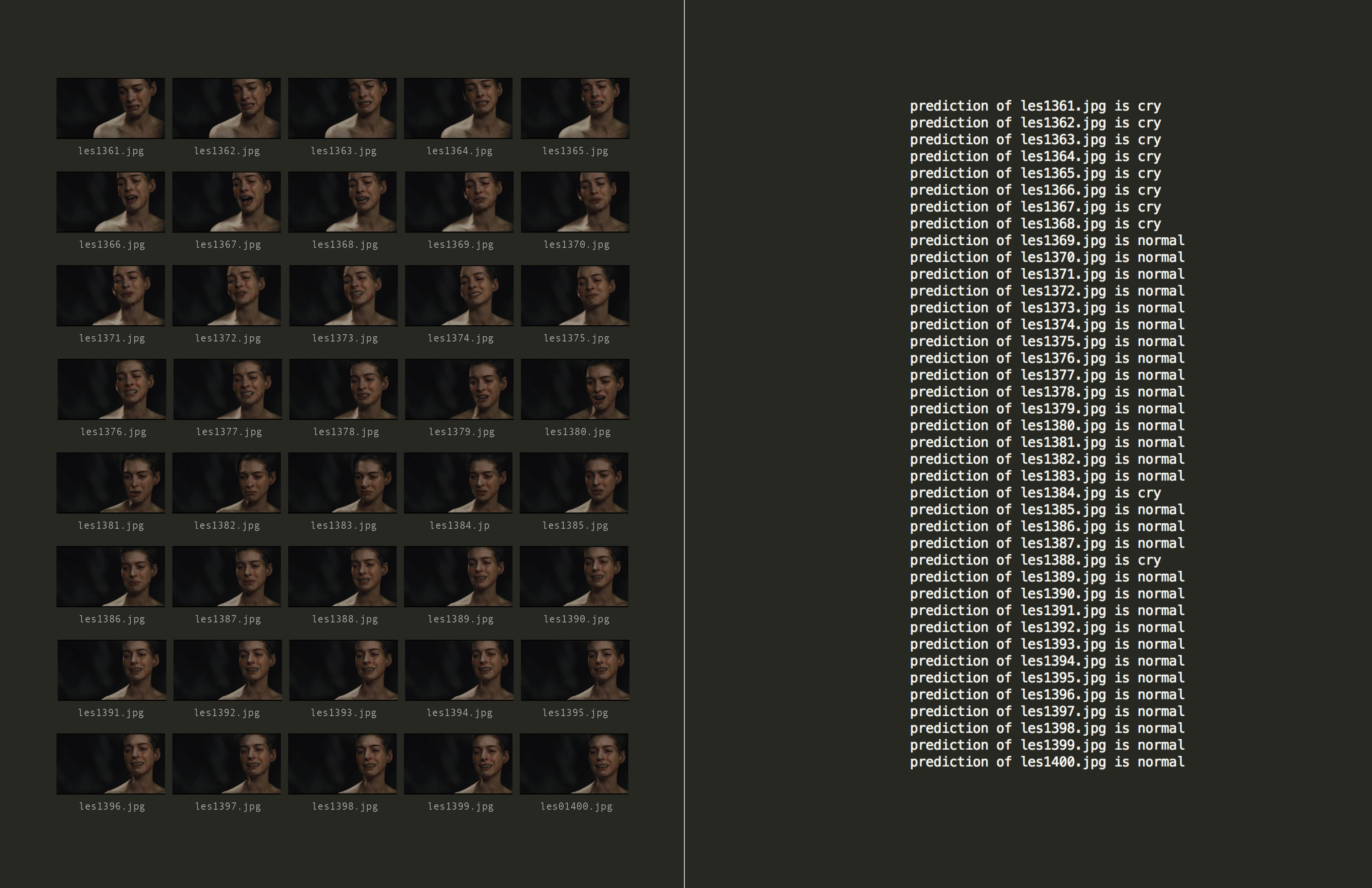

Next, with the trained model in hand, I needed a new set of data to see whether the model was accurate. I chose the section of the movie Les Miserables with the song I Dreamed a Dream. It is a highly emotional song, during which the actress cries and sings at the same time. I wanted to use this song as my final new data to express how my facial classification reads the movie. I saved each frame of the clip and ran it through the model. The result showed which images were crying and which were normal.
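The per-frame prediction step can be sketched as below, assuming the model outputs a probability of "cry" for each frame. The 0.5 threshold, the function names, and the sample probabilities are made up for illustration and do not come from the project.

```python
def label_frames(cry_probabilities, threshold=0.5):
    """Turn per-frame probabilities of "cry" into "cry"/"normal" labels."""
    return ["cry" if p >= threshold else "normal" for p in cry_probabilities]


def crying_segments(labels, fps):
    """Group consecutive "cry" frames into (start_seconds, end_seconds) spans."""
    segments = []
    start = None
    for index, label in enumerate(labels):
        if label == "cry" and start is None:
            start = index  # a crying run begins
        elif label != "cry" and start is not None:
            segments.append((start / fps, index / fps))  # the run ended
            start = None
    if start is not None:
        # the clip ended while the actress was still classified as crying
        segments.append((start / fps, len(labels) / fps))
    return segments


# Made-up model outputs for eight frames of a clip sampled at 4 frames per second.
probabilities = [0.1, 0.2, 0.9, 0.8, 0.7, 0.3, 0.6, 0.95]
labels = label_frames(probabilities)
print(labels)
print(crying_segments(labels, fps=4))  # [(0.5, 1.25), (1.5, 2.0)]
```

Grouping the labels into time spans is an optional extra step; it makes it easy to see when in the song the model reads the actress as crying.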

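The main technique in this work is machine learning. Machine learning first requires a large amount of training data. To get this data, I took 2,000 photos of myself crying and 2,000 photos of my normal expression. The machine learning technique learns the difference between crying and non-crying photos and can then make predictions based on what it has learned. Next, I saved every frame of the video of the song "I Dreamed a Dream" from "Les Miserables" as my test data. Based on what it had learned about crying and not crying, the model determines whether the lead actress's expression is crying or not. If the result is "cry", it triggers my tears machine, so the dummy head cries. In other words, when the actress in the film is crying, the AI robot detects it and sheds tears along with her.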

For the setting of the project, the dummy head faced the TV. Between the TV and the dummy head was the tears machine.

When the actress in the movie was crying, the AI robot detected it and cried as well.

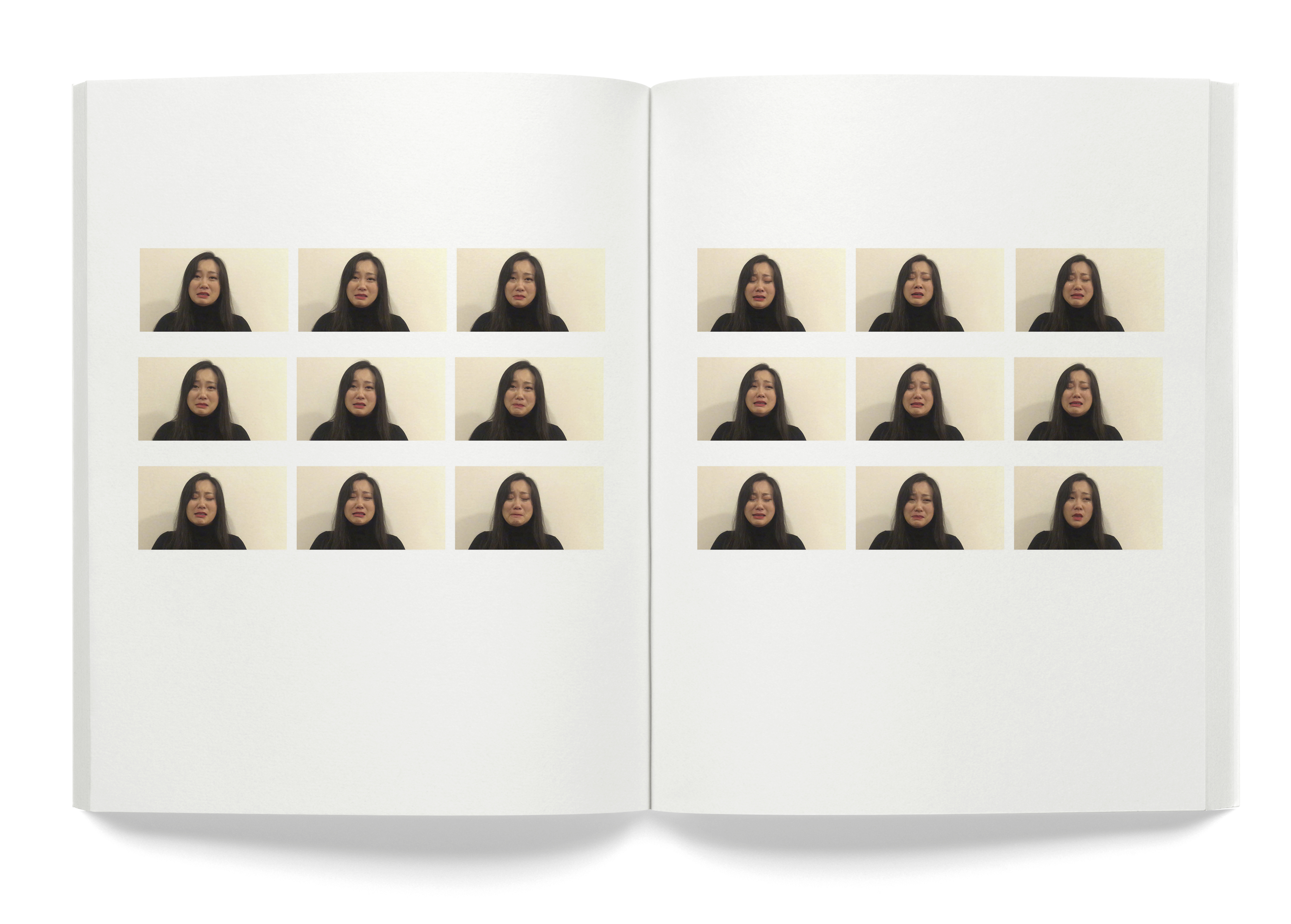

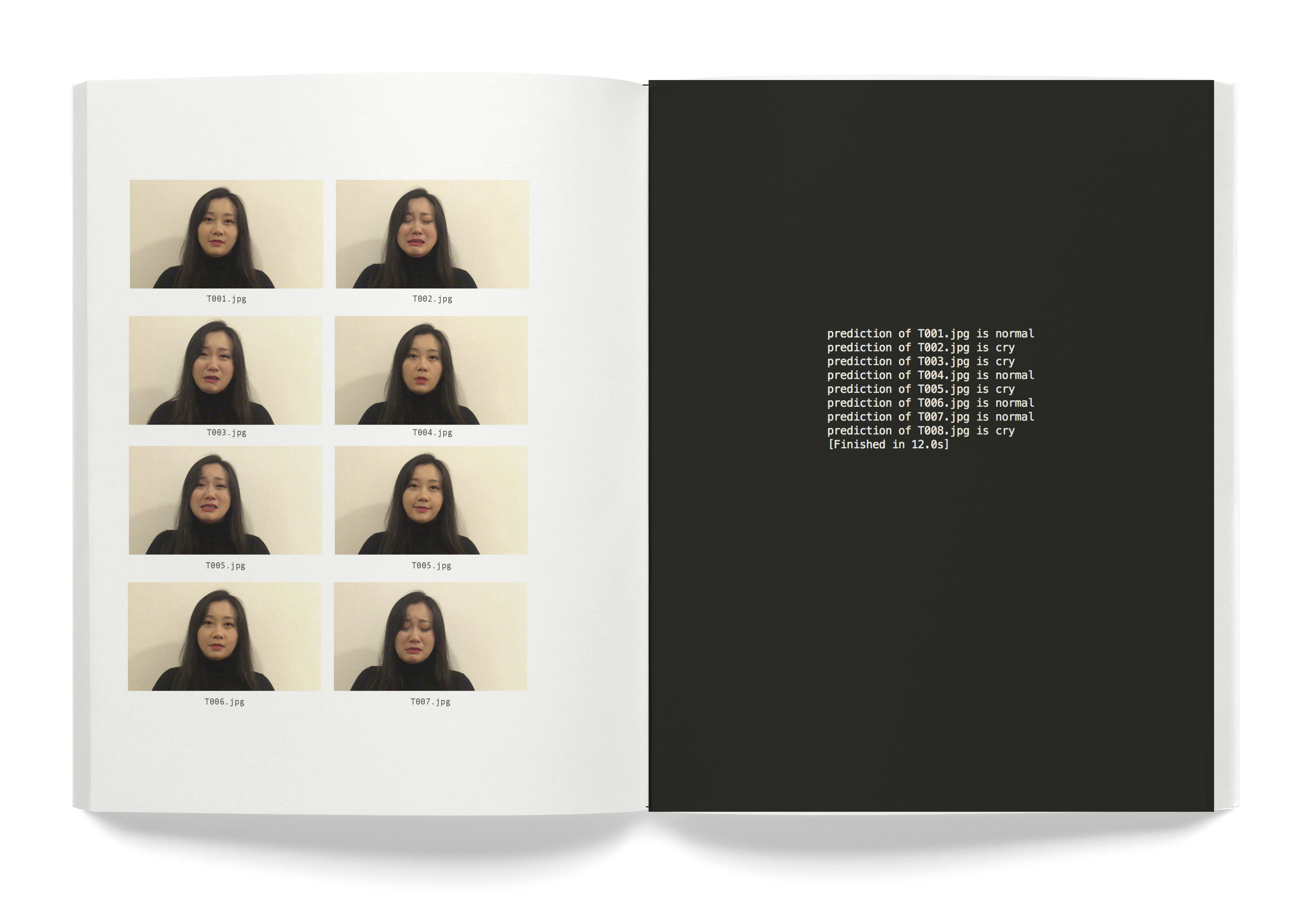

I made two books as part of my documentation. One, Prediction, shows each frame of the movie and how the code sees that image. The other, Training, shows how the computer sees my facial expressions, based on the same model used for Prediction.

Training Data Documentation

Examples from My ‘Crying’ Data Set

Examples from My ‘Normal’ Data Set

Testing my model: which picture is “normal” and which is “cry”

Prediction Data Documentation

Each frame was run through the model. The result showed which images were crying and which were normal.

When the actress in the movie is crying, the AI robot detects it and cries as well.

I also made a sound piece called The Sound of Tears, based on tears dropping onto a foil steam pan. The sound of the falling tears, together with the sound of the motor and syringe pump, can also suggest the sound of a machine crying, which echoes my theme of language.

Sound of tears

© Copyright Xu Han 2020